Hey there! If you’re building a mobile app, you’re probably already juggling a ton of tasks—designing the UI, coding the backend, making sure everything works smoothly. But what if I told you that AI tools could make all of that easier? From speeding up development to improving the overall user experience, AI is like the secret sauce every developer needs today.

Think about it: instead of manually writing lines of code for every single task, you could use AI tools to handle a lot of the heavy lifting. Imagine reducing hours or even days of development time by automating things like image recognition, text translation, or app optimization. Sounds like a dream, right? Well, it’s happening now. In this article, I’m going to introduce you to the best AI tools for mobile app development that can help you turn that dream into reality.

10 Best AI Tools for Mobile App Development

In this section, I’ll introduce you to the top 10 AI tools for mobile app development, each with its own unique set of features. Whether you’re building for Android or iOS (or even both), these tools are designed to help you move faster, create better, and deliver apps that users will love.

Here’s a summary table of the AI tools for mobile app development, grouped by categories:

| Category | Tool | Platform / Notes |
| --- | --- | --- |
| ML Framework / Runtime | TensorFlow Lite | Cross-platform (Android, iOS). Optimized TensorFlow models for mobile. |
| ML Framework / Runtime | Core ML | iOS/macOS. Apple’s ML runtime for on-device inference. |
| ML Framework / Runtime | PyTorch Mobile | Cross-platform. Mobile runtime for PyTorch models. |
| ML Framework / Runtime | ONNX Runtime Mobile | Cross-platform. Runs ONNX models efficiently on mobile devices. |
| SDK / API Toolkit | Google ML Kit | Android & iOS. Prebuilt SDK for text recognition, vision, face detection, etc. |
| SDK / API Toolkit | MediaPipe | Cross-platform. Vision and multimodal ML (pose, face, hands). |
| Platform / Training Tool | Edge Impulse | Platform for TinyML and edge AI model deployment. |
| Model Training / Conversion | Create ML | macOS. Train custom models for Core ML apps. |
| NLP / Transformers | Hugging Face Transformers (mobile-friendly) | Pretrained NLP models, deployable with TFLite or Core ML. |
| NLP / Tiny Models | BERT Mobile / TinyML Implementations | Lightweight BERT/TinyML models optimized for mobile and embedded devices. |

1. TensorFlow Lite

What it is:

TensorFlow Lite is Google’s lightweight machine learning runtime, built specifically for mobile phones and embedded systems. It allows you to integrate AI features into your apps without overwhelming the device’s resources.

Key features:

- On-device inference: Everything runs locally, meaning quicker responses and better privacy since data doesn’t need to be sent to the cloud.

- Low latency: Ideal for tasks like image recognition or speech processing, where speed is essential.

- Cross-platform support: Works across Android, iOS, embedded Linux, and microcontrollers, making it flexible for various platforms.

- Optimized for hardware acceleration: Can offload inference to the GPU and, on iOS, to Core ML through delegates, making AI even faster.

- Model optimization: Includes tools such as quantization that reduce model size, making models easier to deploy on mobile devices.
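
To get a feel for what quantization buys you, here is a back-of-the-envelope sketch in Python. It is plain arithmetic, not the TensorFlow Lite converter, and the 4-million-parameter model is a made-up example rather than a real one.

```python
# Rough model-size estimate: parameter count x bytes per weight.
# It ignores file metadata and any layers left in float, so treat it as a ballpark.

BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def estimated_size_mb(num_params: int, dtype: str) -> float:
    """Return the approximate size of the weights in megabytes (10^6 bytes)."""
    return num_params * BYTES_PER_WEIGHT[dtype] / 1_000_000


if __name__ == "__main__":
    params = 4_000_000  # hypothetical small image classifier
    for dtype in ("float32", "float16", "int8"):
        print(f"{dtype}: ~{estimated_size_mb(params, dtype):.1f} MB")
```

Going from 32-bit floats to 8-bit integers cuts the weights to roughly a quarter of their original size, which is why quantization is usually the first thing to try when an app download gets too heavy.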

Pricing:

TensorFlow Lite is completely free, which makes it accessible for developers of all experience levels.

Use case:

I used TensorFlow Lite in an app to add real-time image recognition. The results were fast, smooth, and seamless, with no lag whatsoever. If your app needs to process data or images quickly, this tool is essential.

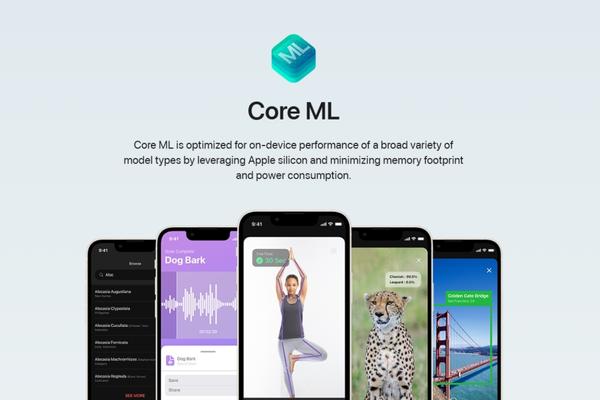

2. Core ML

What it is:

Core ML is Apple’s machine learning framework, designed to bring AI features to iPhone and iPad apps. It lets programmers take advantage of powerful machine learning without having to deal with the underlying complexity.

Key Features & Benefits:

- Native Integration: Core ML seamlessly integrates with Swift and Xcode, eliminating the need for complex workarounds. This straightforward integration process ensures that you can easily incorporate AI features into your app.

- Versatile ML Tasks: Core ML empowers you with a wide range of machine learning functions, from image classification to text analysis and even sound recognition. This flexibility allows you to customize the AI to fit your app’s unique needs perfectly.

- Efficient Performance: Core ML is meticulously optimized to run efficiently on iOS devices, ensuring that your app can perform machine learning tasks quickly without draining the battery. This high level of performance is perfect for apps that require real-time AI processing.

- Privacy: Like TensorFlow Lite, Core ML processes everything on-device, keeping user data private. This means that sensitive data, such as health records or financial information, never leaves the user’s device, ensuring maximum privacy and security. This is a huge advantage for apps dealing with sensitive information.

- Low Power Consumption: One of the standout features is its minimal impact on power. Your app can run smoothly with AI features without worrying about battery life on iPhones or iPads.

Pricing:

Core ML is free of charge, as it is part of the suite of tools Apple provides to developers. If you are already developing for Apple platforms, integrating machine learning into your apps will not entail additional costs.

Workflow:

- Model Creation: You can train a model using Create ML, Apple’s no-code tool, or convert a pre-existing model (like TensorFlow or PyTorch) to Core ML’s .mlmodel format using Core ML Tools. This process involves understanding the structure of the model, its input and output, and the type of data it can process.

- Compilation: Once the model is ready, Xcode compiles it at build time (you can also compile it from the command line) into an optimized format that runs on iOS devices.

- Integration: Add the .mlmodel file to your Xcode project so the compiled model ships in your app’s bundle, ready to be used from your app’s code.

- Runtime Inference: Use Core ML’s APIs in Swift or Objective-C to load the model and use it to make predictions directly on the device. This makes your app AI-powered in no time!

Use Case:

I used Core ML to add voice recognition to an app. Voice-to-text worked reliably across iOS devices, and the integration was seamless for users. If you need to add voice commands or image recognition to an application, Core ML gives you many sophisticated options. It can also be used for tasks like sentiment analysis in social media apps or fraud detection in financial apps.

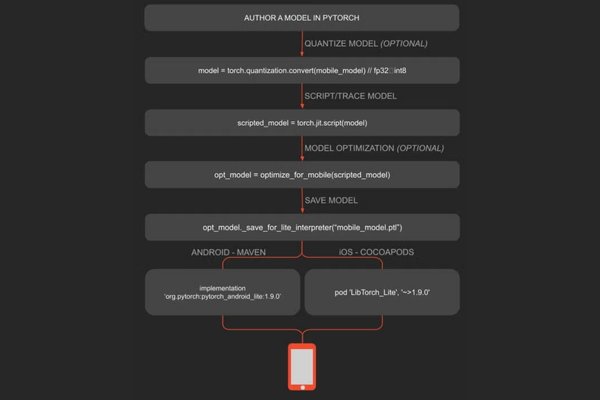

3. PyTorch Mobile

What it is:

PyTorch Mobile, a versatile runtime, empowers you to run PyTorch models directly on mobile devices. Its adaptability allows you to incorporate custom AI models, making it a perfect fit for deep learning tasks. Whether your focus is on Android or iOS, PyTorch Mobile gives you the power to bring AI to your users without relying on cloud processing.

Key Features:

- Cross-Platform Support: PyTorch Mobile works on both Android and iOS so that you can build apps for a wide range of users.

- Efficient Model Inference: PyTorch Mobile can use hardware acceleration on mobile GPUs and NPUs where the device and backend support it. This matters most for real-time tasks like image recognition or language translation.

- Deep Learning Ready: PyTorch Mobile can handle complex tasks like natural language processing (NLP) and image recognition, which suits apps that need to process large amounts of data or make intelligent decisions.

- Seamless Integration: It fits well into your existing mobile development workflows. PyTorch Mobile offers APIs for both iOS and Android, making it easy to add machine learning models to your app.

- Customizable Models: You can use pre-trained models or create custom models. This flexibility lets you add the specific AI features your app needs.

The PyTorch Mobile Workflow:

- Model Training: Start by training your model using PyTorch on your local machine or a server. You can use prebuilt models or create your own from scratch.

- Model Optimization: After training, optimize your model for mobile devices. This often includes quantization, which reduces the model’s size and helps it run more efficiently on mobile.

- Model Conversion: Convert your model to TorchScript with PyTorch’s built-in functions like torch.jit.script() or torch.jit.trace(). This makes the model mobile-friendly.

- Integration into the App: Add the TorchScript model to your mobile app. Use PyTorch Mobile’s APIs for iOS or Android to load and run the model on the device.

- On-Device Inference: Use the device’s GPU or NPU for fast, efficient processing. The app can now process data on the device, providing immediate responses without cloud reliance.

Pricing:

PyTorch Mobile is completely free. It’s open-source, so you can integrate AI into your app without any cost for the framework itself. Cloud services or specialized hardware, if you use them for certain tasks, are billed separately.

Use Case:

I used PyTorch Mobile to add a custom NLP model for text classification. It worked great on both Android and iOS. The app processed text in real time and gave users instant feedback. It was fast, efficient, and didn’t need cloud services to work.

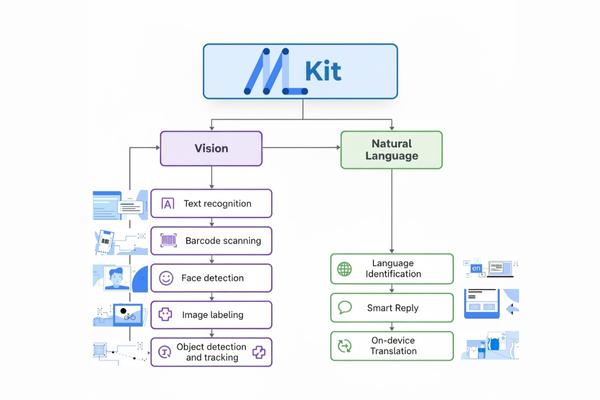

4. Google ML Kit

What it is:

Google’s ML Kit is a cross-platform SDK designed to bring machine learning to your mobile apps. It includes prebuilt models and easy-to-use APIs for adding features like face detection, text recognition, and barcode scanning. If you’re looking for quick and straightforward AI functionality, this is a great choice.

Key Features:

- Prebuilt APIs for Quick Integration: ML Kit offers ready-to-use APIs for tasks like face detection, barcode scanning, and text recognition. Just a few lines of code will give your app these powerful features that take advantage of all that machine learning has to offer.

- Cross-Platform Support: Whether you’re building for Android or iOS, ML Kit works seamlessly across both platforms. This helps you reach more users without having to build separate solutions.

- On-device Processing: Processing happens directly on the device, ensuring faster results and better privacy. Plus, your app works even when offline, making it ideal for real-time tasks and areas with poor connectivity.

- Custom Model Support: While ML Kit comes with prebuilt models, you can also use your own TensorFlow Lite models. This flexibility lets you build custom AI features that fit your app’s needs.

Types of APIs:

- Face Detection: Detect faces in photos or live camera streams, even in low light.

- Text Recognition: Extract text from images in multiple fonts and languages. Great for document scanning or text translation.

- Barcode Scanning: Read barcodes and QR codes—perfect for retail, inventory, or payment apps.

- Object Detection and Tracking: Identify and track objects in real-time. Ideal for augmented reality apps or any app that uses the camera.

Pricing:

Google ML Kit is free to use. It’s part of Google’s suite of tools, so you don’t have to pay to integrate the APIs. However, if you use Google Cloud services for extra storage or advanced machine learning, there may be additional costs. But the core functionality remains free.

Use Case:

In a recent project, I added barcode scanning to a retail app using ML Kit. The setup was quick, and the scanner worked perfectly, even in low light. This feature significantly improved the user experience and made the checkout process faster and easier.

5. Create ML

What it is:

Create ML is a tool from Apple that helps developers easily train custom machine learning models for iOS apps. If you want to add machine learning features without complex coding, this tool is perfect. With its no-code interface, you can easily build models for various tasks, even if you’re new to machine learning.

Key Features:

- Intuitive Interface: Create ML offers a drag-and-drop interface. It’s simple to use, and no coding is required. This makes it beginner-friendly, so anyone can jump in and start building models.

- Powerful Model Creation: It takes advantage of Apple silicon (M1 and later) and the Mac’s GPU for fast model training. Even with its easy interface, Create ML can handle complex tasks like image recognition and personalized recommendations.

- Diverse Model Types: Create ML supports a wide range of models for different tasks. You can use it for:

  - Image Classification: Categorize images into groups.

  - Text Classification: Sort text by topic or sentiment.

  - Sound Classification: Recognize various sounds.

  - Motion Classification: Detect gestures or activities.

  - Tabular Data: Predict outcomes based on structured data (e.g., CSV files).

How It Works:

- Data Collection: Gather your data (images, text, or sound) and organize it for Create ML to use during training.

- Model Selection: Open Create ML (in Xcode, choose Xcode > Open Developer Tool > Create ML), choose the model type (e.g., image classification), and add your data.

- Training: Create ML uses your data to train the model. It automatically adjusts and optimizes the model to give the best performance.

- Evaluation: After training, test the model’s performance. You can check its accuracy and make improvements if needed.

- Export and Integration: Once satisfied, export the trained model as a .mlmodel file. Then, integrate it into your iOS app using Core ML.
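
For an image classifier, the data collection step mostly comes down to folders: Create ML reads one subfolder per label and uses the folder name as the class. Here is a minimal Python sketch of how I would lay that out before opening Create ML. The `organize_dataset` helper, the `Training`/`Testing` folder names, and the 80/20 split are my own assumptions, not anything Create ML requires.

```python
import random
import shutil
import tempfile
from pathlib import Path


def organize_dataset(items, out_dir, test_fraction=0.2, seed=0):
    """Copy (image_path, label) pairs into out_dir/{Training,Testing}/<label>/.

    The split is a simple shuffle, not stratified, so very small classes
    may end up entirely in one split.
    """
    rng = random.Random(seed)
    shuffled = list(items)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    counts = {"Training": 0, "Testing": 0}
    for i, (src, label) in enumerate(shuffled):
        split = "Testing" if i < n_test else "Training"
        dest = Path(out_dir) / split / label
        dest.mkdir(parents=True, exist_ok=True)
        shutil.copy(src, dest / Path(src).name)
        counts[split] += 1
    return counts


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        raw = Path(tmp) / "raw"
        raw.mkdir()
        items = []
        for label in ("cat", "dog"):
            for i in range(5):
                image = raw / f"{label}_{i}.jpg"
                image.write_bytes(b"")  # empty placeholder instead of a real photo
                items.append((image, label))
        print(organize_dataset(items, Path(tmp) / "dataset"))
```

You would then point Create ML at the `Training` folder for training data and at `Testing` for evaluation.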

Limitations:

- Platform Exclusivity: Create ML is only available for macOS and works with Xcode. Developers using other platforms can’t access it.

- Limited Customization: While Create ML is great for many tasks, it may not be ideal for complex models or those needing highly specific customization.

- Resource Intensive: Training large models can demand a lot of CPU and GPU power. This can slow down other tasks on your machine.

Pricing:

Create ML is free. It’s included with Xcode, so you only need a macOS device to get started—no extra costs involved.

Use Case:

I used Create ML to build a custom recommendation engine for an iOS app. The setup was quick and easy, and the model worked well to deliver personalized content to users. It’s an excellent tool for developers who want to add machine learning without dealing with complicated code.

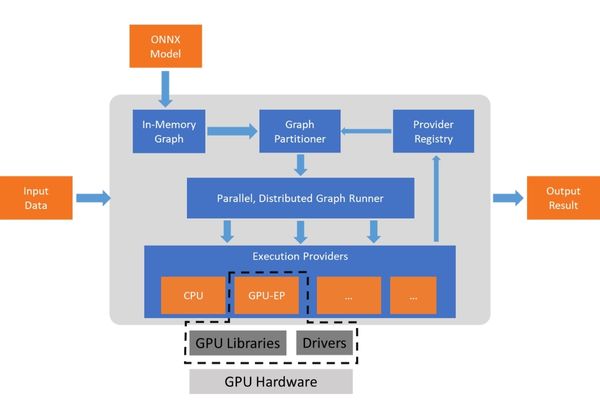

6. ONNX Runtime Mobile

What it is:

ONNX Runtime Mobile is a lightweight framework that allows you to run machine learning models directly on mobile devices. It runs models in the ONNX format, so it works with models trained in PyTorch, TensorFlow, or other popular frameworks once they are exported. It also supports both Android and iOS, which makes it a good fit for developers who need to deploy the same model on both.

Key Features:

- Cross-Framework Compatibility: ONNX Runtime Mobile supports models trained in PyTorch and TensorFlow. This makes it easy to use existing models and integrate them into your app.

- Optimized for Mobile Devices: It is optimized for mobile hardware, so it uses less power and runs efficiently. This is crucial for real-time applications that must work smoothly without draining the device’s battery.

- Supports Both Android and iOS: Whether you’re developing for Android or iOS, ONNX Runtime Mobile works seamlessly on both platforms. This saves time and eliminates the need to create separate codebases for each platform.

How It Works:

- Model Conversion: Convert your models from PyTorch or TensorFlow into the ONNX format for compatibility with ONNX Runtime Mobile.

- Optimization: Once in the ONNX format, optimize your model for mobile use. This involves reducing the model’s size to ensure it performs well on mobile devices.

- Integration: Integrate the optimized model into your app using the ONNX Runtime Mobile API. It’s an easy process that ensures everything runs smoothly.

- Inference: After integration, the model can process data and make predictions directly on the device. It works in real-time, offering fast and efficient results.

Use Cases:

- Real-time Audio Processing: ONNX Runtime Mobile can process audio in real-time, making it perfect for tasks like noise cancellation and speech enhancement.

- Image Recognition: It works well for object detection and facial recognition. Whether you’re building a security app or a social platform, it provides fast and efficient on-device image analysis.

- Natural Language Processing (NLP): It supports NLP tasks such as sentiment analysis and text classification, allowing apps to process and analyze text locally, improving both speed and privacy.

Pricing:

ONNX Runtime Mobile is free to use as part of the open-source ONNX Runtime project. There are no additional costs for inference on mobile devices, although using cloud services or specialized hardware for training may incur separate costs.

In my own testing, the model ran flawlessly on both Android and iOS, delivering fast, real-time predictions. The easy integration and cross-platform support made the development process much quicker.

7. MediaPipe

What it is:

MediaPipe is a powerful framework by Google that allows you to build real-time machine learning pipelines. It is particularly known for computer vision tasks, such as pose estimation, hand tracking, and augmented reality (AR) applications. MediaPipe simplifies the integration of AI into mobile apps, offering robust solutions for developers who need quick and efficient results.

Key Features:

- Prebuilt Models for AR and Computer Vision Tasks: MediaPipe offers several prebuilt models that handle common tasks like face detection, hand tracking, and pose estimation. These models save time by providing out-of-the-box solutions for developers working on AR and computer vision.

- Cross-Platform Compatibility: Whether you’re working with Android or iOS, MediaPipe is designed to run seamlessly on both platforms. This ensures that your apps can offer real-time AI features regardless of the device.

- Easy Integration with Other ML Frameworks: MediaPipe works well alongside other machine learning frameworks, allowing you to integrate it smoothly into your existing workflow. Whether you’re using TensorFlow or another tool, you can easily add MediaPipe into the mix.

How It Works:

- Pipeline Creation: You create a custom pipeline by connecting different MediaPipe components. These components could involve tasks like image preprocessing, landmark detection, and inference.

- Data Processing: Input data (like video frames or images) flows through the pipeline in real time. The models process the data on the device, making it ideal for applications that need instant feedback.

- Real-Time Output: After processing, the output can be used in your app. For example, detected hand landmarks can be used for gesture control, or face landmarks can anchor AR effects such as filters.
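
To make the gesture-control idea concrete, here is a small Python sketch that turns hand landmarks into a "pinch" signal. It assumes you already have the 21 hand landmarks MediaPipe produces as normalized (x, y) pairs; the `is_pinch` helper and the 0.3 threshold are my own illustrative choices, and the demo uses synthetic points instead of a real camera frame.

```python
import math

# Landmark indices from MediaPipe's 21-point hand model.
WRIST = 0
THUMB_TIP = 4
INDEX_FINGER_TIP = 8
MIDDLE_FINGER_MCP = 9


def _dist(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def is_pinch(landmarks, threshold=0.3):
    """Return True when thumb tip and index tip are close relative to hand size.

    Dividing by the wrist-to-knuckle distance keeps the check roughly
    independent of how far the hand is from the camera.
    """
    hand_size = _dist(landmarks[WRIST], landmarks[MIDDLE_FINGER_MCP])
    if hand_size == 0:
        return False
    gap = _dist(landmarks[THUMB_TIP], landmarks[INDEX_FINGER_TIP])
    return gap / hand_size < threshold


if __name__ == "__main__":
    hand = [(0.5, 0.5)] * 21  # synthetic hand, not real detector output
    hand[WRIST] = (0.5, 0.9)
    hand[MIDDLE_FINGER_MCP] = (0.5, 0.6)
    hand[THUMB_TIP] = (0.45, 0.5)
    hand[INDEX_FINGER_TIP] = (0.47, 0.5)
    print("pinching:", is_pinch(hand))
```

In a real app you would call something like this once per frame and smooth the result over a few frames so the gesture does not flicker.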

Use Cases:

- Real-Time Hand Tracking: MediaPipe excels in real-time hand tracking, making it perfect for AR apps that need to detect hand gestures. It’s incredibly efficient and can track hands with high accuracy, even in low-light conditions.

- Pose Estimation for Fitness Apps: By using MediaPipe for pose estimation, I was able to integrate real-time body tracking into a fitness app. The app could give users feedback on their posture and movements, improving their workout experience.

- Augmented Reality (AR) Integration: If you’re building an AR app, MediaPipe’s face detection and landmark tracking features make it much easier to create realistic virtual effects or filters that track the user’s face or body movements.
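
For the fitness use case, posture feedback usually boils down to joint angles computed from three pose landmarks. The Python sketch below shows the geometry only. The `squat_feedback` helper and its 100-degree target are hypothetical values I picked for illustration, and the points stand in for hip, knee, and ankle landmarks from a pose model.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    angle = math.degrees(
        math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    )
    angle = abs(angle)
    # Always report the inner angle, between 0 and 180 degrees.
    return 360 - angle if angle > 180 else angle


def squat_feedback(hip, knee, ankle, target_degrees=100.0):
    """Very rough squat-depth check based on the knee angle."""
    if joint_angle(hip, knee, ankle) <= target_degrees:
        return "good depth"
    return "go lower"


if __name__ == "__main__":
    # Standing straight: hip, knee, and ankle are in a line.
    print(squat_feedback((0.0, 0.0), (0.0, 1.0), (0.0, 2.0)))
    # Thigh horizontal: knee bent to a right angle.
    print(squat_feedback((1.0, 1.0), (0.0, 1.0), (0.0, 2.0)))
```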

Pricing:

MediaPipe is free to use, and it’s open-source. You won’t face any costs for running MediaPipe in your app, making it accessible for all developers, regardless of their project size or budget.

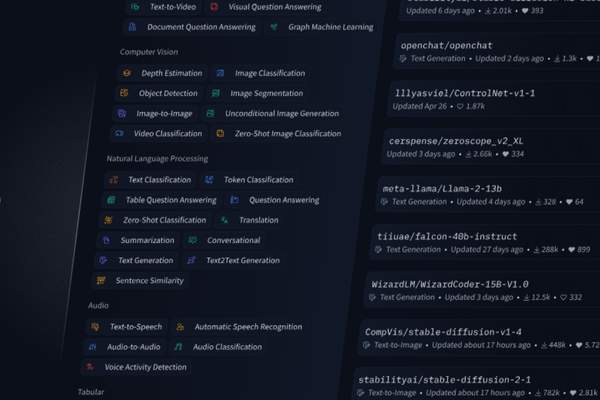

8. Hugging Face Transformers (Mobile-friendly)

What It Is:

Hugging Face Transformers is an open-source library, backed by a large model hub, that gives you access to powerful NLP models such as BERT and GPT-style models. Compact variants such as DistilBERT are small enough to be practical on mobile. These models can do tasks like text classification, sentiment analysis, and text generation, so you can add smart AI to your apps and process text in real time, anywhere.

Key Features:

- Access to a Vast Model Library: Hugging Face offers a large selection of pre-trained models for text, audio, and vision. This makes it easy to choose the right model for your app.

- Fine-Tuning Capabilities: You can fine-tune models with your own data. This helps you get the best performance and accuracy for your specific app.

- Lower Costs and Environmental Impact: Starting from a pre-trained model instead of training one from scratch takes far less compute. This saves money and reduces the carbon footprint, making it a smart choice for developers.

- Easy-to-Use Pipelines: Hugging Face has simple pipelines for tasks like text classification. You don’t need to be an expert in machine learning to use them.

- Framework Interoperability: Hugging Face models work with TensorFlow, PyTorch, and ONNX. You can use them with whatever tools you already have in your app.

- Multimodal Support: Hugging Face supports different types of models, including:

  - Audio: Models like Whisper for speech recognition.

  - Vision: Models for image recognition and object detection.

  - Text: NLP models like BERT and GPT for tasks such as sentiment analysis and text generation.

Mobile Integration Strategies:

- On-Device Deployment: Deploying Hugging Face models on mobile devices allows fast, offline processing. This means your app works without an internet connection, giving users a smooth experience.

- Cloud-Based Deployment: For larger models, you can offload processing to the cloud. This helps keep your app’s performance high without using too many resources on the device.

- Edge Computing: Running models on edge devices reduces delays and improves performance. This approach processes data closer to the source, reducing the need for cloud services.

Pricing:

Hugging Face offers both free and paid options. The Transformers library is open source and many pre-trained models are free to download, but hosted services, such as managed inference or training compute, may require a paid plan.

9. Edge Impulse

What It Is:

Edge Impulse helps you build machine learning models for mobile and embedded devices. It is particularly useful in real-time IoT and sensor-based systems, where low latency matters. With Edge Impulse, you can create smart applications that run directly on the device, which both speeds up responses and reduces reliance on the cloud.

Key Features:

- Integrated Platform: Edge Impulse combines everything into one place. It helps you collect data, train models, optimize them, and deploy them all in one easy workflow.

- Data Acquisition: You can easily collect data from IoT sensors and devices. Edge Impulse supports many data types, making it simple to gather what you need.

- Automated Machine Learning (AutoML): Edge Impulse picks and fine-tunes models for you. It speeds up the process and doesn’t need expert knowledge to get good results.

- Model Optimization: The platform helps you make models that run efficiently on low-power devices. This ensures smooth performance even on small devices.

- Customizable ML Pipelines: You can create your own machine learning workflows. This gives you full control over how data is processed and models are trained.

- Diverse Model Support: Edge Impulse supports many models, such as classification, regression, and anomaly detection. This lets you pick the best one for your app.

- Broad Deployment Options: You can deploy models to a wide range of devices. From microcontrollers to more powerful devices, it scales with your needs.

- Real-Time Testing: Test your models in real-time. See how they perform and make quick adjustments before deploying them.

- Multi-Object Detection (FOMO): FOMO (Faster Objects, More Objects) detects multiple objects in real time on constrained hardware. It’s great for applications like surveillance and robotics.

Typical Workflow:

- Data Collection: Gather data from IoT sensors, wearables, or other devices using Edge Impulse’s tools.

- Model Training: Use AutoML to train models on your collected data. Edge Impulse chooses and fine-tunes the best model for your use case.

- Model Optimization: Optimize the trained models to run on edge devices with limited resources.

- Deployment: Deploy the models to edge devices, so they can process data and make decisions in real-time.

- Real-Time Testing: Test your models in real-world conditions. Edge Impulse provides immediate feedback for adjustments.
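
Between data collection and training, raw sensor streams are typically cut into short overlapping windows and summarized into features. The Python sketch below illustrates that idea with accelerometer-style readings. It is not the Edge Impulse SDK; the function names, window size, and stride are assumptions I chose for the example.

```python
import math


def sliding_windows(samples, window_size, stride):
    """Yield overlapping windows of samples; a trailing partial window is dropped."""
    for start in range(0, len(samples) - window_size + 1, stride):
        yield samples[start:start + window_size]


def rms_features(window):
    """Root-mean-square per axis for a window of (x, y, z) readings."""
    n = len(window)
    return tuple(
        math.sqrt(sum(sample[axis] ** 2 for sample in window) / n)
        for axis in range(3)
    )


if __name__ == "__main__":
    # Synthetic data: four readings along x, then four along y.
    samples = [(1.0, 0.0, 0.0)] * 4 + [(0.0, 2.0, 0.0)] * 4
    for window in sliding_windows(samples, window_size=4, stride=2):
        print(rms_features(window))
```

Each feature tuple would become one training example for a classifier or anomaly detector, which keeps the model small enough for a microcontroller.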

Pricing:

- Free Developer Plan: Perfect for small projects or solo developers. It gives you access to key features, including training models and basic deployment.

- Enterprise Plan: Ideal for larger teams or businesses. It includes priority support, more deployment options, and advanced analytics. Pricing is customized based on your needs.

10. BERT Mobile / TinyML Implementations

What It Is:

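TinyML is the practice of running machine learning models on devices with very limited memory and power, from phones down to wearables and microcontrollers. For NLP, compact BERT variants such as MobileBERT and DistilBERT apply the same idea, shrinking the model so that tasks like text classification can run on a phone. This approach works well when devices need to process data in real time but have limited memory or power.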

Key Features:

- Optimized for Low-Resource Devices: TinyML is designed to run on devices with little memory and processing power. It makes machine learning accessible even on small devices like wearables or IoT sensors.

- Efficient for NLP, Image, and Audio Tasks: TinyML handles tasks like text analysis, image recognition, and sound processing. It allows mobile apps to run AI models directly on the device.

- Ideal for Smaller-Scale Mobile Apps: It’s perfect for mobile apps that don’t need a lot of computational power. Whether it’s a simple voice assistant or a fitness app, TinyML is efficient for these smaller applications.

- Frameworks for Implementation:

  - TensorFlow Lite: This lightweight version of TensorFlow is built for mobile devices. It makes it easy to run TinyML models on smartphones and other small devices.

  - ONNX Runtime: ONNX provides a flexible platform for running machine learning models across devices. It supports various hardware and software configurations for mobile and embedded systems.

  - PyTorch Mobile: This framework helps deploy TinyML models on mobile devices. It’s ideal for developers who prefer PyTorch for their machine learning tasks.

Use Cases and Applications:

- Voice Assistants: TinyML can run speech recognition directly on mobile devices, making voice assistants faster and more responsive.

- Fitness Tracking: TinyML models can process data from wearables, such as heart rate or motion sensors, without relying on cloud services.

- Smart Home Devices: TinyML allows real-time data processing for IoT devices like smart cameras and sensors, helping them react to changes quickly.

- Audio Processing: TinyML can detect specific sounds or commands, such as “wake word” detection in smart speakers, all on the device.

Optimization Techniques for Mobile and TinyML BERT:

- Quantization: Reduces the size of the model by converting weights to lower precision. This helps models run faster and use less memory.

- Pruning: Removes unnecessary weights in the model. This reduces the model size without losing too much accuracy.

- Knowledge Distillation: A smaller model is trained to mimic a larger one, making it faster and more efficient for mobile use.
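
If these three techniques feel abstract, the toy Python sketch below shows the core arithmetic behind each one on a plain list of numbers. Real toolchains apply the same ideas to whole tensors with many refinements; the function names and sample weights here are my own, chosen purely for illustration.

```python
import math


def quantize_int8(weights):
    """Symmetric int8 quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in q_weights]


def prune_by_magnitude(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def soft_targets(logits, temperature):
    """Temperature-scaled softmax: the teacher outputs a student learns to match."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.01, 0.9]
    q, scale = quantize_int8(weights)
    print("quantized:", q, "restored:", dequantize(q, scale))
    print("pruned:", prune_by_magnitude(weights, sparsity=0.5))
    print("teacher soft targets:", soft_targets([2.0, 1.0, 0.1], temperature=4.0))
```

A higher temperature flattens the teacher’s probabilities, which gives the smaller student model more information about how the classes relate to each other than a hard label would.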

TinyML offers full-stack developers the tools needed to integrate machine learning models into mobile applications. Developers can use frameworks like TensorFlow Lite to deploy models directly onto mobile devices, making AI accessible in any app.

How AI Tools Transform Mobile App Development

AI tools aren’t just for adding fancy features—they can also boost productivity, improve app performance, and personalize user experiences. Here’s how:

- Boosting productivity: AI tools can automate tasks, suggest improvements, and reduce manual coding, making development faster.

- Improving app quality: Whether it’s real-time predictions or content recommendations, AI helps make your app smarter and more responsive.

- Personalized user experiences: AI allows you to tailor the app experience to each user, making it more engaging and relevant.

Frequently Asked Questions (FAQ)

What are the best AI tools for mobile app development?

TensorFlow Lite, Core ML, and PyTorch Mobile are all great choices depending on your needs.

Which AI tools are best for iOS development?

Core ML and Create ML are ideal for iOS app development.

Can AI tools help with backend development?

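Indirectly. The tools in this list run models on the device itself, which can reduce how much inference your backend has to handle. For server-side AI tasks, the full ONNX Runtime and PyTorch are the usual counterparts to ONNX Runtime Mobile and PyTorch Mobile.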

Conclusion: Choosing the Right AI Tool for Your Mobile App Development Needs

In the end, the best AI tool for your mobile app development depends on your specific needs, whether it’s platform compatibility, machine learning requirements, or user experience. The key is experimenting and finding what works best for your project.
